Providers Tap Data Lakes to Boost Patient Care

When David Higginson arrived at Phoenix Children’s Hospital to serve as CIO in 2011, the organization analyzed much of its data in silos. Clinicians and staff developed reports using information in the facility’s transactional systems, but the data was not widely available between its disparate departments.

Concerned with the lack of information flow, Higginson developed a plan to bring the disparate data together, building the foundation for what would become the hospital’s data lake. He and his team stood up a Microsoft SQL Server instance with extract, transform and load (ETL) packages, and they also launched Microsoft’s Reporting Services application.

“We pulled data from 40 systems — everything from the surgery system to the scanned medical records to the general ledger and payroll,” says Higginson, who is now also the hospital’s executive vice president and chief administrative officer. “Anyplace we could tap data, we did.”

Today, the Phoenix Children’s data lake plays a key role in patient care, storing information for medication and patient analysis. Data lake deployments by healthcare facilities nationwide allow providers and researchers quick access to information from multiple channels to help organize and improve patient care.

Data Lakes Provide Big Data Flexibility

Early health data warehouses used more narrowly focused financial and clinical data sets, says Cynthia Burghard, a research director at IDC Health Insights. But delivering value-based healthcare requires tapping other information sources to more closely follow what is happening with patients, she notes.

Such nontraditional health data originates in many systems, some of which are external to a hospital, Burghard says. That’s where the gains of a data lake come into play. Health systems are starting to recognize its value, she says.

“If I want to do research on asthma, I can go to the lake and pull the data I want and then do my analysis on it,” Burghard says. “It makes sense that CIOs trying to do new work and bring in new data are frustrated with older setups.”

That situation recently played out at Chicago-based University of Illinois Hospital & Health Sciences System (UI Health). Executives increasingly demanded analytics but grew frustrated with the limitations of the system’s data warehouse. Adding new data elements took five or six months, and adding information from new applications was also difficult, recalls Audrius Polikaitis, UI Health’s vice president of IT and CIO.

“That got us thinking that there must be a better way,” he says. “We became fascinated with Hadoop as a Big Data platform and realized we wanted to try the notion of a data lake.”

The idea that UI Health could ingest data without knowing immediately how it would be used intrigued Polikaitis. As use cases dictate, he and his team can create dashboards or offer analytic insights.

“I don’t need to do data modeling up front,” Polikaitis says. “We can take a modular approach and add compute and storage nodes as we need them. It’s a much more flexible and scalable approach to Big Data.”

UI Health’s proof of concept merged data from its electronic health record (EHR) with a patient throughput system and a patient acuity system to build patient flow dashboards for nursing executives. Other projects in the works involve dashboards for ambulatory patient throughput and emergency department volume and operations.
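The ingest-first, model-later approach Polikaitis describes is commonly called schema-on-read. The Python sketch below illustrates the general idea only; it is not UI Health’s implementation. The record layouts, field names and the `patient_flow_view` helper are hypothetical, and a plain list stands in for the Hadoop storage layer.

```python
import json

# Hypothetical raw records, landed in the lake exactly as each system emits them.
RAW_EVENTS = [
    '{"source": "ehr", "patient_id": "p1", "unit": "4W", "event": "admit"}',
    '{"source": "throughput", "patient_id": "p1", "bed_wait_minutes": 42}',
    '{"source": "acuity", "patient_id": "p1", "acuity_score": 3}',
    '{"source": "ehr", "patient_id": "p2", "unit": "ICU", "event": "admit"}',
]


def ingest(lines):
    """Store every record untouched; no data modeling happens at write time."""
    return [json.loads(line) for line in lines]


def patient_flow_view(lake):
    """Apply a schema at read time: one row per patient for a flow dashboard."""
    view = {}
    for record in lake:
        row = view.setdefault(record["patient_id"], {})
        # Copy only the fields this particular use case cares about.
        for field in ("unit", "bed_wait_minutes", "acuity_score"):
            if field in record:
                row[field] = record[field]
    return view


lake = ingest(RAW_EVENTS)
flow = patient_flow_view(lake)
```

A later use case, such as emergency department volume, would add another view function over the same stored records rather than a change to the ingest step.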

Building Safety Nets and Guardian Angels with Big Data

Like UI Health, Phoenix Children’s built its data lake infrastructure without an initial plan for use.

“It was a ‘build it and they will come’ mentality,” Higginson says.

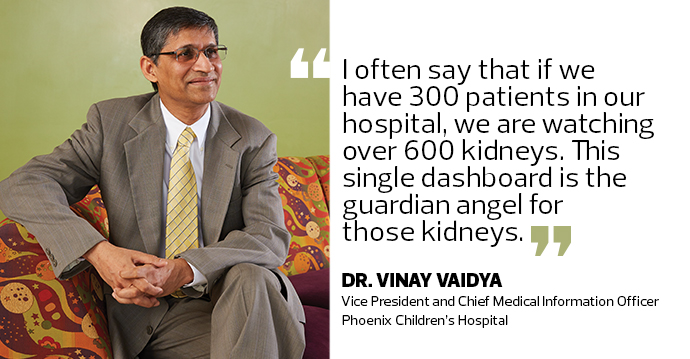

In recent years, the data lake has completely changed the hospital’s culture, says Dr. Vinay Vaidya, Phoenix Children’s vice president and chief medical information officer.

“Now when people bring issues to a meeting, instead of proposing solutions right away, they say ‘let’s look at the data and really try to understand what is happening,’” Vaidya says.

For instance, the data lake helps clinicians deliver more accurate medication dosing. After analyzing more than 750,000 orders placed at the hospital to understand dosing patterns, Phoenix Children’s created an algorithm that provides an electronic safety net in the form of decision support alerts.
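The article does not describe how the hospital’s dosing algorithm works. As a rough illustration of how historical orders could drive a dosing safety net, the sketch below learns a usual range per medication from past orders and flags new orders that fall outside it. The percentile cutoffs, the drug name, the sample doses and both function names are assumptions made for this example.

```python
from collections import defaultdict
from statistics import quantiles

# Hypothetical historical orders: (medication, dose in mg per kg of body weight).
HISTORICAL_ORDERS = [
    ("drug_a", dose)
    for dose in [9.5, 10.0, 10.0, 10.5, 11.0, 10.0, 9.0, 10.5, 11.5, 10.0, 9.5, 10.0]
]


def learn_dose_ranges(orders, low_pct=5, high_pct=95):
    """Derive a usual dose range per medication from past orders.

    The 5th/95th percentile cutoffs are an assumption for this sketch.
    """
    by_med = defaultdict(list)
    for med, dose in orders:
        by_med[med].append(dose)
    ranges = {}
    for med, doses in by_med.items():
        # quantiles(..., n=100) returns the 99 percentile cut points.
        cuts = quantiles(doses, n=100, method="inclusive")
        ranges[med] = (cuts[low_pct - 1], cuts[high_pct - 1])
    return ranges


def check_order(ranges, med, dose):
    """Return an alert message for an out-of-range dose, otherwise None."""
    if med not in ranges:
        return None  # no history for this medication, so nothing to compare
    low, high = ranges[med]
    if dose < low or dose > high:
        return (f"ALERT: {med} dose {dose} mg/kg is outside the usual range "
                f"{low:.1f}-{high:.1f} mg/kg")
    return None


ranges = learn_dose_ranges(HISTORICAL_ORDERS)
alert = check_order(ranges, "drug_a", 100.0)   # far above anything seen before
no_alert = check_order(ranges, "drug_a", 10.0)  # a typical dose
```

A production system would also need to account for factors such as age, weight and indication, which this sketch ignores.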

Phoenix Children's Hospital. Photography by Steve Craft.

Phoenix Children’s also developed a dashboard for kidney care by pulling data from multiple systems in the lake. Kidney injury caused by powerful drugs is a rare, unintended consequence of treating conditions such as cancer and infections.

“This is a perfect case where the combination of data and analytics can be your eyes and ears around the clock looking at the radiology system, pharmacy system, lab system and EHR — bringing all of that data together to alert you,” Vaidya says. “I often say that if we have 300 patients in our hospital, we are watching over 600 kidneys. This single dashboard is the guardian angel for those kidneys.”
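The cross-system watch Vaidya describes can be pictured as a join between medication exposure and lab trends. The sketch below is a simplified illustration, not the hospital’s dashboard logic: the record layouts, the `nephrotoxic` flag, the `kidney_watchlist` function and the 1.5x creatinine rise threshold are all assumptions for this example and are not clinical guidance.

```python
# Hypothetical extracts from two of the systems feeding the lake.
PHARMACY = [
    {"patient_id": "p1", "drug": "drug_x", "nephrotoxic": True},
    {"patient_id": "p2", "drug": "drug_y", "nephrotoxic": False},
    {"patient_id": "p3", "drug": "drug_x", "nephrotoxic": True},
]
LAB = [  # serum creatinine results, listed in time order for each patient
    {"patient_id": "p1", "creatinine": 0.4},
    {"patient_id": "p1", "creatinine": 0.7},
    {"patient_id": "p2", "creatinine": 0.5},
    {"patient_id": "p2", "creatinine": 0.9},
    {"patient_id": "p3", "creatinine": 0.5},
    {"patient_id": "p3", "creatinine": 0.5},
]

RISE_RATIO = 1.5  # illustrative threshold only, not a clinical recommendation


def kidney_watchlist(pharmacy, lab, rise_ratio=RISE_RATIO):
    """List patients on a flagged drug whose creatinine rose past the threshold."""
    exposed = {row["patient_id"] for row in pharmacy if row["nephrotoxic"]}
    series = {}
    for row in lab:
        series.setdefault(row["patient_id"], []).append(row["creatinine"])
    flagged = []
    for patient_id in sorted(exposed):
        values = series.get(patient_id, [])
        # Compare the latest result with the first (baseline) result.
        if len(values) >= 2 and values[-1] >= rise_ratio * values[0]:
            flagged.append(patient_id)
    return flagged


watchlist = kidney_watchlist(PHARMACY, LAB)
```

Here only `p1` is flagged: `p2` shows a rise but is not on a flagged drug, and `p3` is exposed but stable.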

Montefiore Makes the Link to Meaningful Data

Montefiore Health System in New York refers to the Big Data platform it’s creating as a “semantic” data lake, meaning the system both maintains large volumes of data and links that data to metadata and ontologies that make the information meaningful for computers. That makes the data accessible to machines as well as people, says Dr. Parsa Mirhaji, director of clinical research informatics at the Albert Einstein College of Medicine and Montefiore Medical Center-Institute for Clinical Translational Research.

Creating an environment where researchers can experiment and learn from the data requires a level of sophistication beyond just a massive information repository, Mirhaji says.

“It requires consistent management of metadata, terminology management, ontology management, linked open data and modeling, as well as the kinds of automated algorithms that can use these resources efficiently to solve difficult problems,” he says.

Montefiore has worked with several vendors, including Intel and Cisco Systems, to create its platform. The health system handles metadata management and integration of multisource data to support artificial intelligence and deep learning. Data scientists can subscribe to analysis-ready information and aren’t required to know about the data pipelines or back-end processes.

Let’s say a clinician wants to examine how many times certain patients were in the hospital, their diagnoses and the short- and long-term outcomes of those patients. In the past, “it would take two or three pages of SQL code in an enterprise data warehouse to get at that information,” Mirhaji says. “But it only takes four lines of code in the semantic data lake, because you don’t have to say where the data is, how it is linked, and what the computer should do to get that data and format it appropriately for visualization or further analysis. The semantic data lake figures it out automatically.”