Technology Lays a Foundation for Precision Medicine Success

Success in precision medicine, in which treatments are tailored to specific patients, hinges on a healthcare provider’s ability to individualize diagnoses and remedies by linking genomic and molecular data with clinical information gathered in electronic health records.

Key to the effectiveness of such care is a technology infrastructure that both supports robust data storage and provides enough flexibility to enable collaboration among a variety of medical professionals. For instance, the development of a genomic data warehouse at the Mayo Clinic’s Center for Individualized Medicine in Rochester, Minn., required a cross-functional team of 10 staffers, in addition to more than a dozen other developers who provide pipelines, interfaces and other bioinformatic tools, says Mathieu Wiepert, an IT section head for the organization.

Wiepert and his colleagues built a tool that runs on a host of Oracle technologies, including an Exadata Database Machine, powered by an Intel Xeon processor; a ZFS Storage Appliance for backups and file staging; and a WebLogic Server. The Exadata Database Machine, in particular, enables the organization to lower its storage footprint thanks to better compression and less disk scan input/output.

“We take an agile approach,” Wiepert says. “The whole notion of agile is to ensure that the stakeholder is there with you. Constant communication and flexibility are critical.”

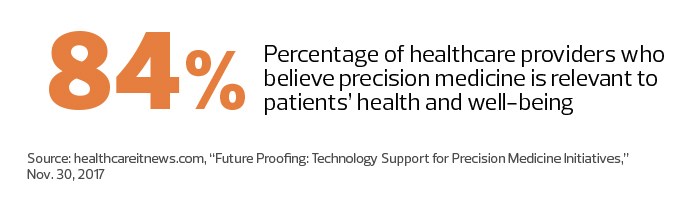

As precision medicine’s prominence grows, healthcare organizations from coast to coast are implementing foundational technology, laying the groundwork for evolving patient treatment.

Providers Eye Flexible PMI Infrastructure

Fifty-six percent of providers surveyed by HIMSS Analytics plan to use a combination of in-house and third-party resources to support precision medicine efforts in the next two years.

That includes Mayo, says Wiepert, who emphasizes that there aren’t vendor solutions for everything the organization tries to accomplish. “We end up doing a lot of homegrown algorithm development and IT tooling,” he says.

Mayo also collaborates with other health systems to share internally developed workflow applications, and plans to work with cloud providers in the future because of the data storage and computing resources required.

Like the Mayo Clinic, the Inova Translational Medicine Institute in Falls Church, Va., supports both large-scale research and clinical genomics testing. That means it requires a more responsive infrastructure.

“In the clinical setting, you have deadlines and laboratory standards,” says Aaron Black, chief data officer at ITMI. “We wanted to do this in a secure and compliant way, as well as determine the most efficient and cost-effective method. We wanted to think of it as a true business rather than just a research venture, which requires a different mindset.”

ITMI studied researchers’ and clinicians’ expectations of turnaround time and compared the hourly cost of cloud services with building a large system onsite. It chose to develop a hybrid solution that combines a public cloud with private, on-premises tools and storage from several vendors, including NetApp.

The setup gives Inova, which supports 2 million patients annually across six hospitals, flexibility in its efforts. “When we notice demand increasing, we ramp up internal systems to support our traditional clinical testing,” Black says.
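The kind of comparison ITMI describes, hourly cloud pricing versus the cost of a large onsite system, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `OnPremSystem` fields, the function names and the cost model itself (straight-line depreciation plus a flat annual operating cost) are hypothetical and are not drawn from ITMI's actual analysis.

```python
from dataclasses import dataclass


@dataclass
class OnPremSystem:
    """Hypothetical on-site cluster; all fields are illustrative assumptions."""
    purchase_cost: float            # up-front hardware spend
    annual_operating_cost: float    # power, cooling, staff, support
    lifetime_years: int             # period over which hardware is written off
    capacity_hours_per_year: float  # compute-hours available if fully used


def annual_cost(system: OnPremSystem) -> float:
    """Yearly cost: straight-line depreciation plus operating cost."""
    return system.purchase_cost / system.lifetime_years + system.annual_operating_cost


def on_prem_hourly_cost(system: OnPremSystem, utilization: float) -> float:
    """Effective cost per compute-hour actually used at a given utilization."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    used_hours = system.capacity_hours_per_year * utilization
    return annual_cost(system) / used_hours


def break_even_utilization(system: OnPremSystem, cloud_hourly_rate: float) -> float:
    """Utilization above which the on-site system is cheaper per hour than cloud.

    A result greater than 1 means the cloud rate is cheaper even at full use.
    """
    if cloud_hourly_rate <= 0:
        raise ValueError("cloud_hourly_rate must be positive")
    return annual_cost(system) / (cloud_hourly_rate * system.capacity_hours_per_year)
```

Under this simplified model, steady workloads that keep the onsite system above the break-even utilization favor on-premises hardware, while bursty demand below it favors paying by the hour, which is one way to read the appeal of a hybrid setup.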

Black, who is charged with pulling together a team with the skills to develop and manage the infrastructure, calls finding the right people with the right skills a challenge.

“These are some of the biggest systems that I have ever managed, and you have to have different skills for each,” he says. “We have to translate what scientists and researchers are trying to do into the infrastructure needs of the organization. It is definitely a team sport. You can’t have only really smart infrastructure people or really smart data people or scientists; you need them all working together toward the same goal.”

Disruptive Medicine Needs Disruptive Infrastructure

Although the Scripps Translational Science Institute in La Jolla, Calif., is a leader in translational genomics, its precision medicine focus also includes creating digital mobile apps to gather patient-generated data that can be combined with genomic and lab data in new ways. That leads to infrastructure challenges that differ from the challenges of building genomic data warehouses.

Despite precision medicine’s promise, efforts to implement it can be slowed by the limitations of current systems of care, which are not necessarily designed to take advantage of newer digital technology, says Dr. Steven Steinhubl, STSI’s director of digital medicine. Using a clinical study involving frequent blood pressure measurement as an example, he notes that clinicians don’t know what to do with all the new data.

“Any solution that is going to change the practice of medicine is going to require a brand-new data infrastructure and a lot more use of clinical decision support and alerting systems,” Steinhubl says. “Systems of care must be built around the understanding that there will be more data that has to be explained to individuals. It is not something you can accomplish during a seven-minute visit when you are clicking away on an EHR.”

Both STSI and the Mayo Clinic are participants in the National Institutes of Health All of Us Research Program, an effort that aims to collect and share health data on 1 million U.S. residents to advance precision medicine. Mayo will house all of the biospecimens for the project, Wiepert says.

Seeking a Digitally Scalable Infrastructure

Steinhubl calls All of Us one of the most amazing research programs ever undertaken.

“What makes the effort unique and applicable to care is that all the information we gather, we are giving back to participants in a way they can understand,” he says. “We need to appreciate how important that is and how difficult it is to do in a scalable way.”

Even something as simple as returning a blood pressure value to an individual is less straightforward than people realize, Steinhubl says. Whether a reading is considered normal depends on age, diagnoses and comorbidities.

“We need to figure that out in a way that is digitally scalable,” Steinhubl says. “We can’t call a million people and follow up with them on their blood pressure value or their genomic information. It goes beyond precision data to precision implementation of that data.”
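To make the blood pressure example concrete, here is a minimal Python sketch of context-dependent feedback, in which the same reading produces different messages for different participants. Everything in it is an assumption made for illustration: the `Participant` type, the rule list and especially the numeric limits are placeholders, not clinical guidance and not how All of Us returns results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Participant:
    """Hypothetical participant record used only for this sketch."""
    age: int
    conditions: frozenset = frozenset()


# PLACEHOLDER limits for illustration only; these are NOT clinical thresholds.
DEFAULT_SYSTOLIC_LIMIT = 130

# Ordered rules: the first rule whose predicate matches supplies the limit.
RULES = [
    (lambda p: "diabetes" in p.conditions, 125),
    (lambda p: p.age >= 65, 140),
]


def systolic_limit(participant: Participant) -> int:
    """Return the illustrative limit that applies to this participant."""
    for applies, limit in RULES:
        if applies(participant):
            return limit
    return DEFAULT_SYSTOLIC_LIMIT


def feedback(participant: Participant, systolic: int) -> str:
    """Turn a raw reading into a plain-language message for the participant."""
    if systolic <= systolic_limit(participant):
        return "within your target range"
    return "above your target range; consider following up with your clinician"


if __name__ == "__main__":
    # The same reading is interpreted differently depending on context.
    for person in (Participant(age=70), Participant(age=40)):
        print(person.age, feedback(person, 135))
```

Keeping the rules in a data structure rather than in scattered conditionals is one way such logic could be reviewed by clinicians and applied automatically to a large cohort, rather than relying on individual follow-up calls.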

Testing the Wearable Waters for Precision Medicine

As part of the National Institutes of Health All of Us Research Program, the Scripps Translational Science Institute will provide up to 10,000 Fitbit Charge 2 and Fitbit Alta HR devices to a representative sample of volunteers for a one-year study on how the devices could be incorporated more broadly into the research program.

One goal of All of Us is to pull together all electronic health record data, genomic information and proteomic information, as well as survey data and continuous physiological data. Much of that will come from wearable sensors or sensors in the home, says Dr. Steven Steinhubl, STSI’s director of digital medicine.

“The major impetus behind the Fitbit pilot is understanding how we incentivize individuals to wear the sensors,” he says. “It is one thing to give a marathon runner a Fitbit, but how do you get other people to wear a sensor device? What kind of motivations and incentives do you offer, and what kind of information do you return to them that is valuable to them?”